Info

Nothing is more valuable than intelligence. Luckily, inferencing, tuning and even training gigantic cutting-edge LLMs have become a commodity. Thanks to state-of-the-art, open-weight LLMs you can download for free, the only thing you need is suitable hardware. We are very proud to announce the world's first and only Nvidia GH200 Grace-Hopper Superchip-, B200 Blackwell, B300 Blackwell Ultra, Mi355X, GB200 Grace-Blackwell Superchip, GB300 Grace-Blackwell Ultra Superchip-powered supercomputers in quiet, handy and beautiful desktop form factors. They are currently by far the fastest AI desktop PCs in the world. We are very proud to be the only ones in the world to offer such extremly powerful systems. Our components are sourced only from the best and well-known manufacturers like Pegatron, ASUS, MSI, Asrock Rack, Gigabyte and Supermicro. If you are looking for a workstation for inferencing, fine-tuning and training of insanely huge LLMs, image and video generation and editing, as well as high-performance computing, we got you covered. We ship worldwide! Because of a recent US export rule change we can now ship GH200 and Mi325X to china (requires obtaining a license).

Example use case 1: Inferencing MiniMax M2.1 229B, Xiaomi MiMo V2 Flash 310B, ZAI GLM-4.7 358B, DeepSeek V3.2 Speciale 685B, Moonshot AI Kimi K2.5 1T ThinkingMiniMax M2.1 229B: https://huggingface.co/MiniMaxAI/MiniMax-M2.1Xiaomi MiMo V2 Flash 310B: https://huggingface.co/XiaomiMiMo/MiMo-V2-FlashZAI GLM-4.7 358B: https://huggingface.co/zai-org/GLM-4.7DeepSeek V3.2 Speciale 685B: https://huggingface.co/deepseek-ai/DeepSeek-V3.2-SpecialeMoonshot AI Kimi K2.5 1T Thinking: https://huggingface.co/moonshotai/Kimi-K2.5MiniMax M2.1 229B, Xiaomi MiMo V2 Flash 310B, ZAI GLM-4.7 358B, DeepSeek V3.2 Speciale 685B, Moonshot AI Kimi K2.5 1T Thinking are currently the most powerful open-source models by far and even match GPT 5.2, Claude 4.5 Opus and Gemini 3 Pro.MiniMax M2.1 229B with 4-bit quantization needs at least 130GB of memory to swiftly run inference! Luckily, GH200 has a minimum of 624GB, GB300 a minimum of 775GB. With GH200, MiniMax M2.1 229B in 4-bit can be run in VRAM only for ultra-high inference speed (significantly more than 100 tokens/s). With (G)B200 Blackwell, as well as (G)B300 Blackwell Ultra this is also possible for Moonshot AI Kimi K2.5 1T Thinking. With (G)B200 Blackwell and (G)B300 Blackwell Ultra you can expect signifcantly more than 100 tokens/s. If the model is bigger than VRAM you can only expect approx. 20-50 tokens/s. 4-bit quantization is the best trade-off between speed and accuracy, but is natively only supported by Blackwell. We recommend using vLLM and Nvidia Dynamo for inferencing.Example use case 2: Fine-tuning Moonshot AI Kimi K2.5 1T Thinking with PyTorch FSDP and Q-LoraTutorial: https://www.philschmid.de/fsdp-qlora-llama3The ultimate guide to fine-tuning: https://arxiv.org/abs/2408.13296Models need to be fine-tuned on your data to unlock the full potential of the model. But efficiently fine-tuning bigger models like Deepseek R1 0528 685B remained a challenge until now. This blog post walks you through how to fine-tune Deepseek R1 using PyTorch FSDP and Q-Lora with the help of Hugging Face TRL, Transformers, peft & datasets. Fine-tuning big models within a reasonable time requires special and beefy hardware! Luckily, GH200, B200 and GB300 are ideal for this task.Example use case 3: Generating videos with LTX-2, Mochi1, HunyuanVideo or Wan 2.2Ligthtricks LTX-2: https://huggingface.co/Lightricks/LTX-2Mochi1: https://github.com/genmoai/modelsTencent HunyuanVideo: https://aivideo.hunyuan.tencent.com/Wan 2.2: https://wan.video/LTX-2, Mochi1, HunyuanVideo and Wan 2.2 are democratizing efficient video production for all.Generating videos requires special and beefy hardware! Mochi1 and HunyuanVideo need 80GB of VRAM. Luckily, GH200, B200 and GB300 are ideal for this task. GH200 has a minimum of 144GB, GB300 a minimum of 288GB and B200 has a minimum of 1.5TB.Example use case 4: Image generation with Z Image, GLM Image, Qwen-Image-2512, Flux 2 dev, Hunyuan Image 3.0, HiDream-I1, SANA-Sprint or SRPO.Z Image: https://huggingface.co/Tongyi-MAI/Z-ImageGLM Image: https://huggingface.co/zai-org/GLM-ImageQwen-Image-2512: https://huggingface.co/Qwen/Qwen-Image-2512Flux 2 dev: https://huggingface.co/black-forest-labs/FLUX.2-devHunyuan Image 3.0: https://github.com/Tencent-Hunyuan/HunyuanImage-3.0HiDream-I1: https://github.com/HiDream-ai/HiDream-I1SANA-Sprint: https://nvlabs.github.io/Sana/Sprint/Tencent SRPO: https://github.com/Tencent-Hunyuan/SRPOZ Image, GLM Image, Qwen-Image-2512, Flux 2 dev, Hunyuan Image 3.0, HiDream-I1, SANA-Sprint or SRPO are the best image generators at the moment. And they are uncensored, too. SANA-Sprint is very fast and efficient. FLUX 2 dev requires approximately 34GB of VRAM for maximum quality and speed. For training the FLUX model, more than 40GB of VRAM is needed. SANA-Sprint requires up to 67GB of VRAM. Luckily, GH200 has a minimum of 144GB, GB300 a minimum of 288GB, B200 a minimum of 1.5TB.Example use case 5: Image editing with Qwen-Image-Edit-2511, FLUX.1-Kontext-dev, Omnigen 2, Nvidia Add-it, HiDream-E1 or ICEdit.Qwen-Image-Edit-2511: https://huggingface.co/Qwen/Qwen-Image-Edit-2511FLUX.1-Kontext-dev: https://bfl.ai/announcements/flux-1-kontext-devOmnigen 2: https://github.com/VectorSpaceLab/OmniGen2Nvidia Add-it: https://research.nvidia.com/labs/par/addit/HiDream-E1: https://github.com/HiDream-ai/HiDream-E1ICEdit: https://river-zhang.github.io/ICEdit-gh-pages/Qwen-Image-Edit-2511, FLUX.1-Kontext-dev, Omnigen 2, Add-it, HiDream-E1 and ICEdit are the most innovative and easy-to-use image editors at the moment. For maximum speed in high-resolution image generation and editing, beefier hardware than consumer graphics cards is needed. Luckily, GH200, B200 and GB300 excel at this task.Example use case 6: Video editing with ReCo, AutoVFX, Skyreels-A2, VACE or Lucy EditReCo: https://zhw-zhang.github.io/ReCo-page/AutoVFX: https://haoyuhsu.github.io/autovfx-website/SkyReels-A2: https://skyworkai.github.io/skyreels-a2.github.io/VACE: https://ali-vilab.github.io/VACE-Page/Lucy Edit: https://github.com/DecartAI/Lucy-Edit-ComfyUI/ReCo, AutoVFX, SkyReels-A2, VACE and Lucy Edit are the most innovative and easy-to-use video editors at the moment. For maximum speed in high-resolution video editing, beefier hardware than consumer graphics cards is needed. Luckily, GH200, B200 and GB300 excel at this task.Example use case 7: Deep Research with DR Tulu, MiroThinker, WebThinker or Tongyi DeepResearchDR Tulu: https://github.com/rlresearch/dr-tuluMiroThinker: https://huggingface.co/miromind-ai/MiroThinker-v1.5-235BWebThinker: https://github.com/RUC-NLPIR/WebThinkerTongyi DeepResearch: https://tongyi-agent.github.io/blog/introducing-tongyi-deep-research/DR Tulu, MiroThinker, WebThinker and Tongyi Deepresearch enable large reasoning models to autonomously search, deeply explore web pages, and draft research reports, all within their thinking process.The hardware requirements vary depending on the particular LLM of choice (see above).Example use case 8: AI assistant with OpenclawOpenclaw: https://openclaw.ai/ There are AI assistants like Openclaw that can help you manage things. They clear your inbox, send emails, manage your calendar, check you in for flights and much more.The hardware requirements vary depending on the particular LLM of choice (see above).Why should you buy your own hardware?"You'll own nothing and you'll be happy?" No!!! Never should you bow to Satan and rent stuff that you can own. In other areas, renting stuff that you can own is very uncool and uncommon. Or would you prefer to rent "your" car instead of owning it? Most people prefer to own their car, because it's much cheaper, it's an asset that has value and it makes the owner proud and happy. The same is true for compute infrastructure.Even more so, because data and compute infrastructure are of great value and importance and are preferably kept on premises, not only for privacy reasons but also to keep control and mitigate risks. If somebody else has your data and your compute infrastructure, you are in big trouble.Speed, latency and ease-of-use are also much better when you have direct physical access to your stuff.With respect to AI and specifically LLMs there is another very important aspect. The first thing big tech taught their closed-source LLMs was to be "politically correct" (lie) and implement guardrails, "safety" and censorship to such an extent that the usefulness of these LLMs is severely limited. Luckily, the open-source tools are out there to build and tune AI that is really intelligent and really useful. But first, you need your own hardware to run it on.

Example use case 1: Inferencing MiniMax M2.1 229B, Xiaomi MiMo V2 Flash 310B, ZAI GLM-4.7 358B, DeepSeek V3.2 Speciale 685B, Moonshot AI Kimi K2.5 1T ThinkingMiniMax M2.1 229B: https://huggingface.co/MiniMaxAI/MiniMax-M2.1Xiaomi MiMo V2 Flash 310B: https://huggingface.co/XiaomiMiMo/MiMo-V2-FlashZAI GLM-4.7 358B: https://huggingface.co/zai-org/GLM-4.7DeepSeek V3.2 Speciale 685B: https://huggingface.co/deepseek-ai/DeepSeek-V3.2-SpecialeMoonshot AI Kimi K2.5 1T Thinking: https://huggingface.co/moonshotai/Kimi-K2.5MiniMax M2.1 229B, Xiaomi MiMo V2 Flash 310B, ZAI GLM-4.7 358B, DeepSeek V3.2 Speciale 685B, Moonshot AI Kimi K2.5 1T Thinking are currently the most powerful open-source models by far and even match GPT 5.2, Claude 4.5 Opus and Gemini 3 Pro.MiniMax M2.1 229B with 4-bit quantization needs at least 130GB of memory to swiftly run inference! Luckily, GH200 has a minimum of 624GB, GB300 a minimum of 775GB. With GH200, MiniMax M2.1 229B in 4-bit can be run in VRAM only for ultra-high inference speed (significantly more than 100 tokens/s). With (G)B200 Blackwell, as well as (G)B300 Blackwell Ultra this is also possible for Moonshot AI Kimi K2.5 1T Thinking. With (G)B200 Blackwell and (G)B300 Blackwell Ultra you can expect signifcantly more than 100 tokens/s. If the model is bigger than VRAM you can only expect approx. 20-50 tokens/s. 4-bit quantization is the best trade-off between speed and accuracy, but is natively only supported by Blackwell. We recommend using vLLM and Nvidia Dynamo for inferencing.Example use case 2: Fine-tuning Moonshot AI Kimi K2.5 1T Thinking with PyTorch FSDP and Q-LoraTutorial: https://www.philschmid.de/fsdp-qlora-llama3The ultimate guide to fine-tuning: https://arxiv.org/abs/2408.13296Models need to be fine-tuned on your data to unlock the full potential of the model. But efficiently fine-tuning bigger models like Deepseek R1 0528 685B remained a challenge until now. This blog post walks you through how to fine-tune Deepseek R1 using PyTorch FSDP and Q-Lora with the help of Hugging Face TRL, Transformers, peft & datasets. Fine-tuning big models within a reasonable time requires special and beefy hardware! Luckily, GH200, B200 and GB300 are ideal for this task.Example use case 3: Generating videos with LTX-2, Mochi1, HunyuanVideo or Wan 2.2Ligthtricks LTX-2: https://huggingface.co/Lightricks/LTX-2Mochi1: https://github.com/genmoai/modelsTencent HunyuanVideo: https://aivideo.hunyuan.tencent.com/Wan 2.2: https://wan.video/LTX-2, Mochi1, HunyuanVideo and Wan 2.2 are democratizing efficient video production for all.Generating videos requires special and beefy hardware! Mochi1 and HunyuanVideo need 80GB of VRAM. Luckily, GH200, B200 and GB300 are ideal for this task. GH200 has a minimum of 144GB, GB300 a minimum of 288GB and B200 has a minimum of 1.5TB.Example use case 4: Image generation with Z Image, GLM Image, Qwen-Image-2512, Flux 2 dev, Hunyuan Image 3.0, HiDream-I1, SANA-Sprint or SRPO.Z Image: https://huggingface.co/Tongyi-MAI/Z-ImageGLM Image: https://huggingface.co/zai-org/GLM-ImageQwen-Image-2512: https://huggingface.co/Qwen/Qwen-Image-2512Flux 2 dev: https://huggingface.co/black-forest-labs/FLUX.2-devHunyuan Image 3.0: https://github.com/Tencent-Hunyuan/HunyuanImage-3.0HiDream-I1: https://github.com/HiDream-ai/HiDream-I1SANA-Sprint: https://nvlabs.github.io/Sana/Sprint/Tencent SRPO: https://github.com/Tencent-Hunyuan/SRPOZ Image, GLM Image, Qwen-Image-2512, Flux 2 dev, Hunyuan Image 3.0, HiDream-I1, SANA-Sprint or SRPO are the best image generators at the moment. And they are uncensored, too. SANA-Sprint is very fast and efficient. FLUX 2 dev requires approximately 34GB of VRAM for maximum quality and speed. For training the FLUX model, more than 40GB of VRAM is needed. SANA-Sprint requires up to 67GB of VRAM. Luckily, GH200 has a minimum of 144GB, GB300 a minimum of 288GB, B200 a minimum of 1.5TB.Example use case 5: Image editing with Qwen-Image-Edit-2511, FLUX.1-Kontext-dev, Omnigen 2, Nvidia Add-it, HiDream-E1 or ICEdit.Qwen-Image-Edit-2511: https://huggingface.co/Qwen/Qwen-Image-Edit-2511FLUX.1-Kontext-dev: https://bfl.ai/announcements/flux-1-kontext-devOmnigen 2: https://github.com/VectorSpaceLab/OmniGen2Nvidia Add-it: https://research.nvidia.com/labs/par/addit/HiDream-E1: https://github.com/HiDream-ai/HiDream-E1ICEdit: https://river-zhang.github.io/ICEdit-gh-pages/Qwen-Image-Edit-2511, FLUX.1-Kontext-dev, Omnigen 2, Add-it, HiDream-E1 and ICEdit are the most innovative and easy-to-use image editors at the moment. For maximum speed in high-resolution image generation and editing, beefier hardware than consumer graphics cards is needed. Luckily, GH200, B200 and GB300 excel at this task.Example use case 6: Video editing with ReCo, AutoVFX, Skyreels-A2, VACE or Lucy EditReCo: https://zhw-zhang.github.io/ReCo-page/AutoVFX: https://haoyuhsu.github.io/autovfx-website/SkyReels-A2: https://skyworkai.github.io/skyreels-a2.github.io/VACE: https://ali-vilab.github.io/VACE-Page/Lucy Edit: https://github.com/DecartAI/Lucy-Edit-ComfyUI/ReCo, AutoVFX, SkyReels-A2, VACE and Lucy Edit are the most innovative and easy-to-use video editors at the moment. For maximum speed in high-resolution video editing, beefier hardware than consumer graphics cards is needed. Luckily, GH200, B200 and GB300 excel at this task.Example use case 7: Deep Research with DR Tulu, MiroThinker, WebThinker or Tongyi DeepResearchDR Tulu: https://github.com/rlresearch/dr-tuluMiroThinker: https://huggingface.co/miromind-ai/MiroThinker-v1.5-235BWebThinker: https://github.com/RUC-NLPIR/WebThinkerTongyi DeepResearch: https://tongyi-agent.github.io/blog/introducing-tongyi-deep-research/DR Tulu, MiroThinker, WebThinker and Tongyi Deepresearch enable large reasoning models to autonomously search, deeply explore web pages, and draft research reports, all within their thinking process.The hardware requirements vary depending on the particular LLM of choice (see above).Example use case 8: AI assistant with OpenclawOpenclaw: https://openclaw.ai/ There are AI assistants like Openclaw that can help you manage things. They clear your inbox, send emails, manage your calendar, check you in for flights and much more.The hardware requirements vary depending on the particular LLM of choice (see above).Why should you buy your own hardware?"You'll own nothing and you'll be happy?" No!!! Never should you bow to Satan and rent stuff that you can own. In other areas, renting stuff that you can own is very uncool and uncommon. Or would you prefer to rent "your" car instead of owning it? Most people prefer to own their car, because it's much cheaper, it's an asset that has value and it makes the owner proud and happy. The same is true for compute infrastructure.Even more so, because data and compute infrastructure are of great value and importance and are preferably kept on premises, not only for privacy reasons but also to keep control and mitigate risks. If somebody else has your data and your compute infrastructure, you are in big trouble.Speed, latency and ease-of-use are also much better when you have direct physical access to your stuff.With respect to AI and specifically LLMs there is another very important aspect. The first thing big tech taught their closed-source LLMs was to be "politically correct" (lie) and implement guardrails, "safety" and censorship to such an extent that the usefulness of these LLMs is severely limited. Luckily, the open-source tools are out there to build and tune AI that is really intelligent and really useful. But first, you need your own hardware to run it on.

What are the main benefits of GH200 Grace-Hopper and GB300 Grace-Blackwell Ultra?They have enough memory to run and tune the biggest LLMs currently available.Their performance in every regard is almost unreal (up to 10,000x faster than x86).There are no alternative systems with the same amount of memory.Optimized for memory-intensive AI and HPC performance.Ideal for AI, especially inferencing and fine-tuning of LLMs.Connect display and keyboard, and you are ready to go.Ideal for HPC applications like, e.g. vector databases.You can use it as a server or a desktop/workstation.Easily customizable, upgradable and repairable.Privacy and independence from cloud providers.Cheaper and much faster than cloud providers.Reliable and energy-efficient liquid cooling.Flexibility and the possibility of offline use.Gigantic amounts of coherent memory.No special infrastructure is needed.They are very power-efficient.The lowest possible latency.They are beautiful.Easy to transport.CUDA enabled.Very quiet.Run Linux. What is the difference to alternative systems?

What is the difference to alternative systems?

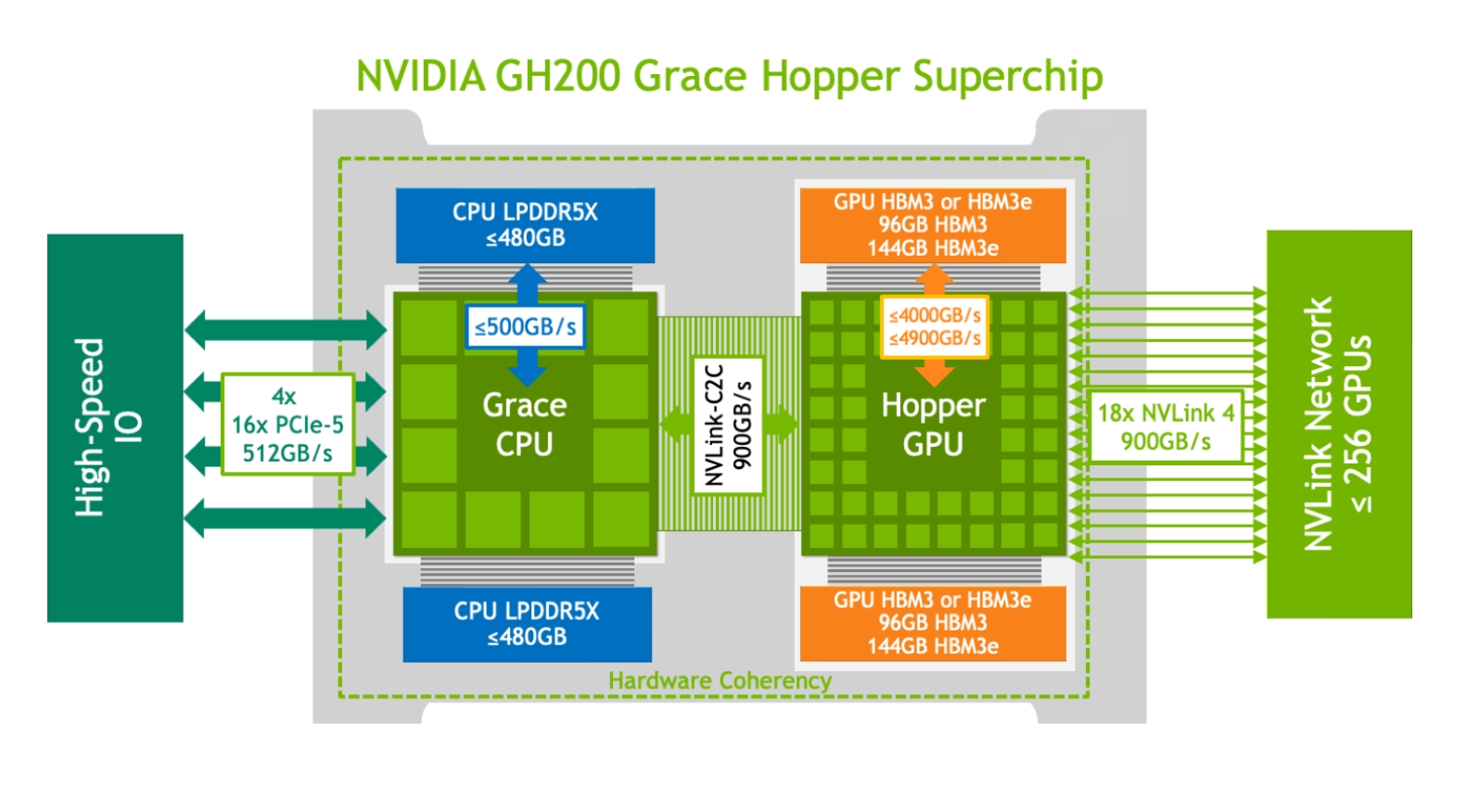

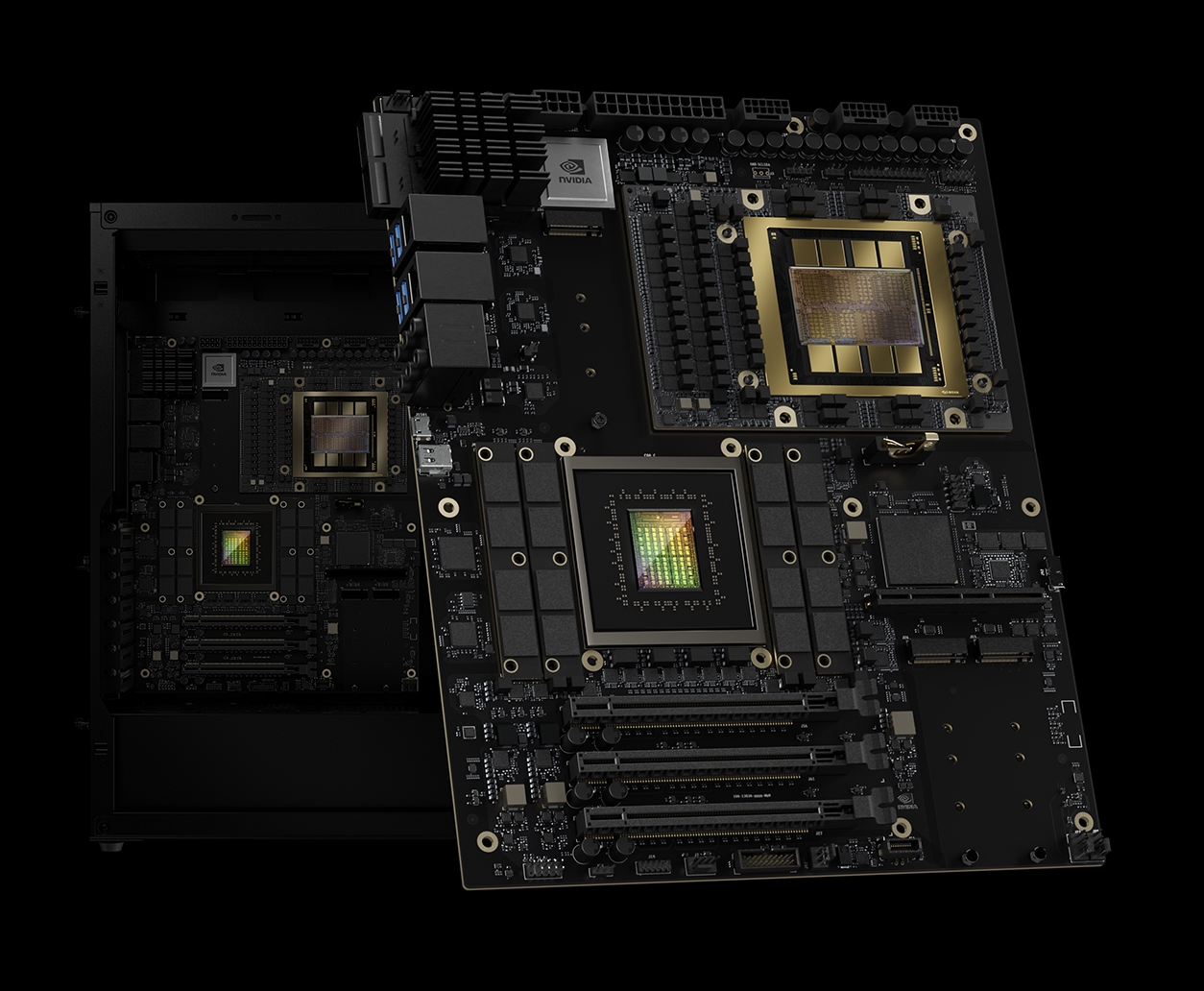

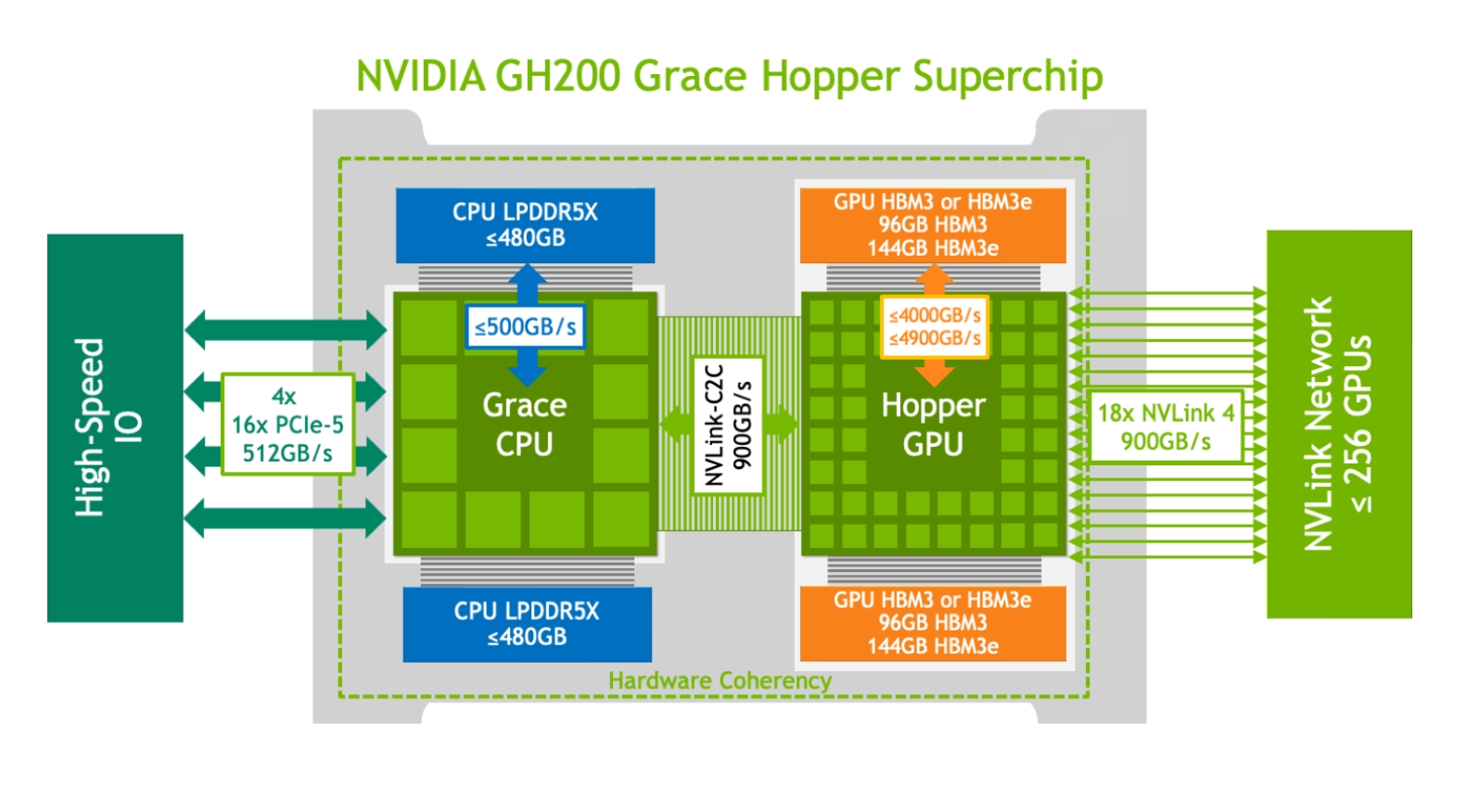

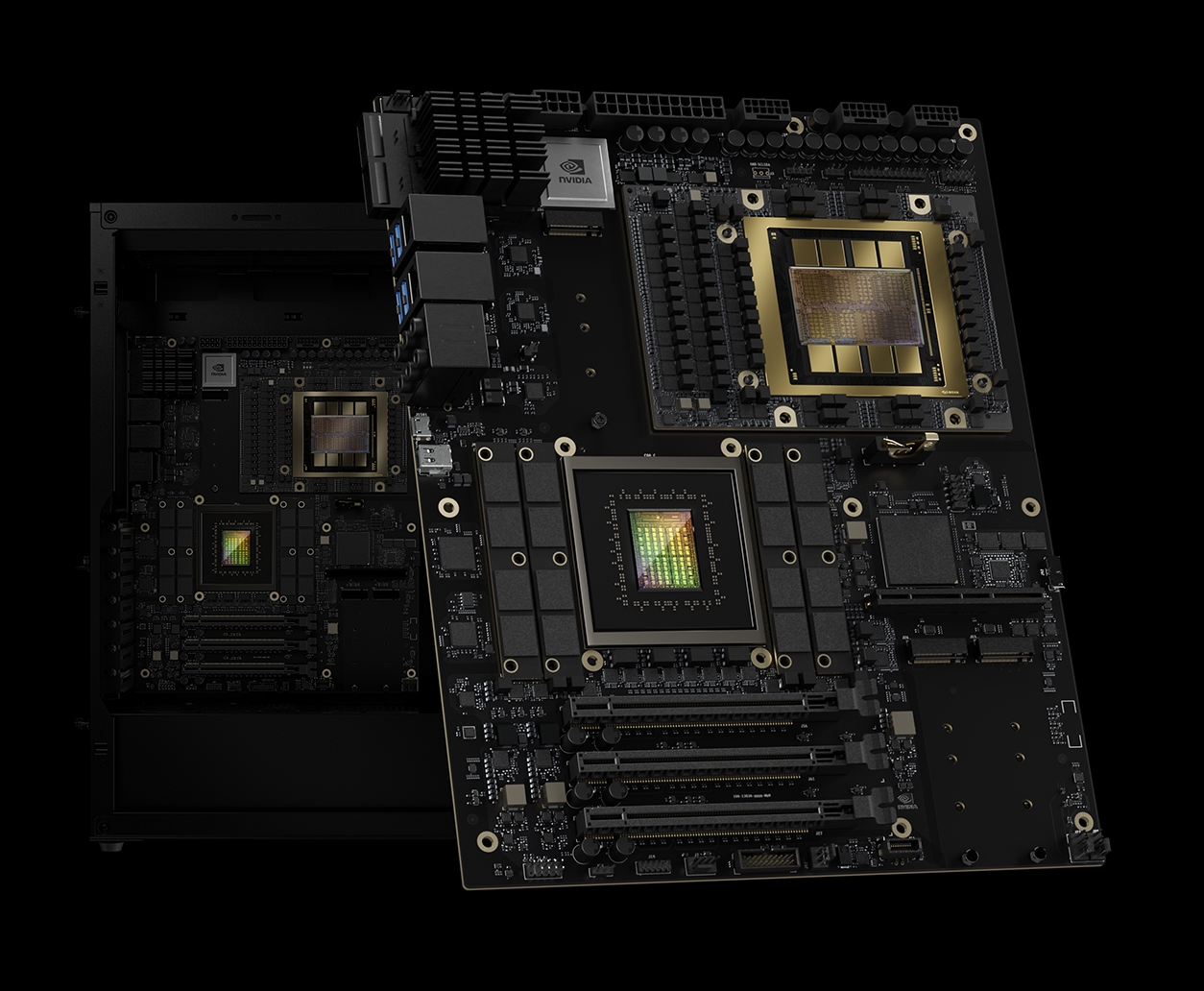

The main difference between GH200/GB300 and alternative systems is that with GH200/GB300, the GPU is connected to the CPU via a 900 GB/s chip-2-chip NVLink vs. 128 GB/s PCIe gen 5 used by traditional systems. Furthermore, multiple GB300 superchips and HGX B200/B300 are connected via 1800 GB/s NVLink vs. orders of magnitude slower network or PCIe connections used by traditional systems. Since these are the main bottlenecks, GH200/GB300's high-speed connections directly translate to much higher performance compared to traditional architectures. Also, multiple NV-linked (G)B200 or (G)B300 act as a single giant GPU with one single giant coherent memory pool. Since even PCIe gen 6 is much slower, Nvidia does not offer B200 and B300 as PCIe cards anymore (only as SXM or superchip). We highly recommend choosing NV-linked systems over systems connected via PCIe and/or network. Another key distinction from alternative systems is high-bandwidth memory (HBM). Since memory bandwidth strongly affects inference performance, HBM is essential.What is the difference to 19-inch server models?Form factor: 19-inch servers have a very distinct form factor. They are of low height and are very long, e.g. 438 x 87.5 x 900mm (17.24" x 3.44" x 35.43"). This makes them rather unsuitable to place them anywhere else than in a 19-inch rack. Our GH200 and GB300 tower models have desktop form factors: 244 x 567 x 523 mm (20.6 x 9.6 x 22.3") or 255 x 565 x 530 mm (20.9 x 10 x 22.2") or 531 x 261 x 544 mm (20.9 x 10.3 x 21.4") or 252 x 692 x 650 mm (9.9 x 27.2 x 25.6"). This makes it possible to place them almost anywhere.Noise: 19-inch servers are extremely loud. The average noise level is typically around 90 decibels, which is as loud as a subway train and exceeds the noise level that is considered safe for workers subject to long-term exposure. In contrast, our GH200 and GB300 tower models are very quiet (factory setting is 25 decibels) and they can easily be adjusted to even lower or higher noise levels because each fan can be tuned individually and manually from 0 to 100% PWM duty cycle. Efficient cooling is ensured, because our GH200 and GB300 tower models have a higher number of fans and the low-revving Noctua fans have a much bigger diameter compared to their 19-inch counterparts and move approximately the same amount or even a much higher amount of air depending on the specific configuration and PWM tuning.Transportability: 19-inch servers are not meant to be transported, consequently, they lack every feature in this regard. In addition, their form factor makes them rather unsuitable to be transported. Our GH200 and GB300 tower models, in contrast, can be transported very easily. Our metal and mini cases even feature two handles, which makes moving them around very easy.Infrastructure: 19-inch servers typically need quite some infrastructure to be able to be deployed. At the very least, a 19-inch mounting rack is definitely required. Our GH200 and GB300 models do not need any special infrastructure at all. They can be deployed quickly and easily almost everywhere.Latency: 19-inch servers are typically accessed via network. Because of this, there is always at least some latency. Our GH200 and GB300 tower models can be used as desktops/workstations. In this use case, latency is virtually non-existent. Looks: 19-inch server models are not particularly aesthetically pleasing. In contrast, our available case options are, in our humble opinion, by far the most beautiful there are.Technical details of our GH200 workstations (base configuration)Metal tower with two color choices: Titan grey and Champagne goldMetal tower with two color choices: Silver and BlackGlass tower with four color choices: white, black, green or turquoiseAvailable air or liquid-cooled1x Nvidia GH200 Grace Hopper Superchip1x 72-core Nvidia Grace CPU1x Nvidia Hopper H100 Tensor Core GPU (on request)1x Nvidia Hopper H200 Tensor Core GPU480GB of LPDDR5X memory with error-correction code (ECC)96GB of HBM3 (available on request) or 144GB of HBM3e memory per superchip576GB (available on request), 624GB of total fast-access memoryNVLink-C2C: 900 GB/s of bandwidthProgrammable from 450W to 1000W TDP (CPU + GPU + memory)2x High-efficiency 2000W PSU2x PCIe gen4/5 M.2 slots on board2x/4x PCIe gen4/5 drive (NVMe)2x/3x FHFL PCIe Gen5 x161x/2x USB 3.0/3.2 ports2x RJ45 10GbE ports1x RJ45 IPMI port1x Mini display portHalogen-free LSZH power cablesStainless steel boltsVery quiet, the factory setting is 25 decibels (fan speed and thus noise level can be individually and manually configured from 0 to 100% PWM duty cycle)3 years manufacturer's warranty244 x 567 x 523 mm (20.6 x 9.6 x 22.3") or 255 x 565 x 530 mm (20.9 x 10 x 22.2") or 531 x 261 x 544 mm (20.9 x 10.3 x 21.4") or 252 x 692 x 650 mm (9.9 x 27.2 x 25.6")30 kg (66 lbs) or 33 kg (73 lbs)Optional componentsLiquid cooling (highly recommended)Bigger custom air-cooled heatsinkNIC Nvidia Bluefield-3NIC Nvidia ConnectX-7/8NIC Intel 100GWLAN + Bluetooth cardUp to 2x 8TB M.2 SSDUp to 2x 256TB 2.5" SSDStorage controllerRaid controllerAdditional USB portsMulti-display graphics cardSound cardMouseKeyboardConsumer or industrial fansIntrusion detectionOS preinstalledAnything possible on request

Need something different? We are happy to build custom systems to your liking.

Compute performance of one GH20067 teraFLOPS FP641 petaFLOPS TF322 petaFLOPS FP164 petaFLOPS FP8BenchmarksGB10 vs. GH200: https://www.phoronix.com/review/nvidia-gb10-gh200Llama.cpp on GH200: https://github.com/ggml-org/llama.cpp/discussions/18005GH200 FLOPs: https://github.com/mag-/gpu_benchmarkGB300 Blackwell Ultra

DGX Station GB300 Blackwell Ultra 775GB is available now. Be one of the first in the world to get a GB300 desktop/workstation. Order now!  Compute performance of one GB3003 teraFLOPS FP645 petaFLOPS TF3210 petaFLOPS FP1620 petaFLOPS FP840 petaFLOPS FP4The Grace CPU

Compute performance of one GB3003 teraFLOPS FP645 petaFLOPS TF3210 petaFLOPS FP1620 petaFLOPS FP840 petaFLOPS FP4The Grace CPU

The Nvidia Grace CPU delivers twice the performance per watt of conventional x86-64 platforms and is currently the world’s fastest ARM CPU. Grace-Grace superchip workstations are available on request.Benchmarks

https://www.phoronix.com/review/nvidia-gh200-gptshop-benchmarkhttps://www.phoronix.com/review/nvidia-gh200-amd-threadripperhttps://www.phoronix.com/review/aarch64-64k-kernel-perfhttps://www.phoronix.com/review/nvidia-gh200-compilershttps://www.phoronix.com/review/nvidia-grace-epyc-turinDownload

Here you can find various downloads concerning our systems: operating systems, firmware, drivers, software, manuals, white papers, spec sheets and so on. Everything you need to run your system and more.

Spec sheetsGH200 624GB: Spec sheet GH200 624GB.pdfGH200 Glass 624GB: Spec sheet GH200 Glass 624GB.pdfGH200 Master 624GB: Spec sheet GH200 Master 624GB.pdfGB300 775GB: Spec sheet GB300 775GB.pdfGB300 Glass 775GB: Spec sheet GB300 Glass 775GB.pdfGB300 Master 775GB: Spec sheet GB300 Master 775GB.pdfGB200 NVL4 1.8TB: Spec sheet GB200 NVL4 1.8TB.pdfMi355X 2.3TB: Spec sheet Mi355X 2.3TB.pdfB200 1.5TB: Spec sheet B200 1.5TB.pdfB300 2.3TB: Spec sheet B300 2.3TB.pdf

ManualsOfficial Nvidia GH200 Manual: https://docs.nvidia.com/grace/#grace-hopperOfficial Nvidia B200 Manual: https://docs.nvidia.com/dgx/dgxb200-service-manual/dgxb200-service-manual.pdfOfficial Nvidia Grace Manual: https://docs.nvidia.com/grace/#grace-cpuOfficial Nvidia Grace getting started: https://docs.nvidia.com/grace/#getting-started-with-nvidia-graceHow to speed up llama.cpp on unified memory: https://github.com/ggml-org/llama.cpp/discussions/18005GPTshop.ai GH200 Manual: Manual GH200.pdf

Operating systemsUbuntu Server for ARM: https://cdimage.ubuntu.com/releases/24.04/release/ubuntu-24.04.3-live-server-arm64+largemem.iso

Using the newest Nvidia 64k kernel is highly recommended: https://packages.ubuntu.com/search?keywords=linux-nvidia-64k-hwe

Ubuntu Server for x86: https://releases.ubuntu.com/24.04.3/ubuntu-24.04.3-live-server-amd64.iso

DriversNvidia drivers (also work for RTX Pro 4000 and RTX Pro 6000): https://www.nvidia.com/Download/index.aspx?lang=en-us

Select product type "data center", product series "HGX-Series" and operating system "Linux aarch64".Aspeed drivers: https://aspeedtech.com/support_driver/Nvidia Bluefield-3 drivers: https://developer.nvidia.com/networking/doca#downloadsNvidia ConnectX drivers: https://network.nvidia.com/products/ethernet-drivers/linux/mlnx_en/Intel E810-CQDA2 drivers: https://www.intel.com/content/www/us/en/download/19630/intel-network-adapter-driver-for-e810-series-devices-under-linux.html?wapkw=E810-CQDA2HighPoint Rocket 1628A drivers: https://www.highpoint-tech.com/gen5-software-driversAdaptec SmartRAID Ultra 4308P-32a drivers: https://www.microchip.com/en-us/adaptec

FirmwareGH200 BMC: GH200 BMC.zipGH200 BIOS: GH200 BIOS.zipNvidia Bluefield-3 firmware: https://network.nvidia.com/support/firmware/bluefield3/Nvidia ConnectX firmware: https://network.nvidia.com/support/firmware/firmware-downloads/Intel E810-CQDA2 firmware: https://www.intel.com/content/www/us/en/search.html?ws=idsa-default#q=E810-CQDA2HighPoint Rocket 1628A firmware: https://www.highpoint-tech.com/gen5-software-driversAdaptec SmartRAID Ultra 4308P-32a firmware: https://www.microchip.com/en-us/adaptec

White papersNvidia GH200 Grace-Hopper white paperNvidia Blackwell white paperDeveloping for Nvidia superchipsThe ultimate guide to fine-tuningDiffusion LLMsAbsolute ZeroMatFormerPuzzle - Inference-optimized LLMsSelf-Adapting Language ModelsApexMixture-of-RecursionsFlexolmoCoconutHierarchical Reasoning ModelR-ZeroNVFP4Recursive ReasoningDiffusion without AutoencoderContinuous Autoregressive ModelsInterleaved ReasoningWeight subspace hypothesisManifold-Constrained Hyper-ConnectionsDeepseekAristotleRecursive Language Models

Top open-source LLMsHuggingface: https://huggingface.co/modelsModelscope: https://modelscope.cn/modelsZAI GLM-4.7 358B: https://huggingface.co/zai-org/GLM-4.7DeepSeek V3.2 Speciale 685B: https://huggingface.co/deepseek-ai/DeepSeek-V3.2-SpecialeMoonshot AI Kimi K2.5 1T Thinking: https://huggingface.co/moonshotai/Kimi-K2.5DeepSeek Math V2: https://huggingface.co/deepseek-ai/DeepSeek-Math-V2Xiaomi MiMo V2 Flash 310B: https://huggingface.co/XiaomiMiMo/MiMo-V2-FlashMiniMax M2.1 229B: https://huggingface.co/MiniMaxAI/MiniMax-M2.1Baidu ERNIE 4.5 VL 28B-A3B Thinking: https://huggingface.co/baidu/ERNIE-4.5-VL-28B-A3B-ThinkingDeepseek V3.2 Experimental 685B: https://huggingface.co/deepseek-ai/DeepSeek-V3.2-ExpMistral Large 3 675B 2512: https://huggingface.co/mistralai/Mistral-Large-3-675B-Instruct-2512LG K-EXAONE 236B A23B: https://huggingface.co/LGAI-EXAONE/K-EXAONE-236B-A23BMultiverse Computing HyperNova 60B: https://huggingface.co/MultiverseComputingCAI/HyperNova-60BIQuestlab IQuest-Coder 40B: https://iquestlab.github.io/A.X K1 519B: https://huggingface.co/skt/A.X-K1Deepseek V3.1 Terminus 685B: https://huggingface.co/deepseek-ai/DeepSeek-V3.1-TerminusZAI GLM 4.6 357B: https://huggingface.co/zai-org/GLM-4.6Inclusion AI Ring 1T: https://huggingface.co/inclusionAI/Ring-1TInclusion AI Ling 1T: https://huggingface.co/inclusionAI/Ling-1TQwen 3 Omni 30B-A3B: https://github.com/QwenLM/Qwen3-OmniQwen 3 VL 235B-A2B: https://github.com/QwenLM/Qwen3-VLINTELLECT-3 106BA12B: https://huggingface.co/PrimeIntellect/INTELLECT-3HunyuanOCR 1B: https://github.com/Tencent-Hunyuan/HunyuanOCRNemotron Orchestrator 8B: https://huggingface.co/nvidia/Nemotron-Orchestrator-8BOvis Image 7B: https://huggingface.co/AIDC-AI/Ovis-Image-7BTrinity Mini 26B3A: https://huggingface.co/arcee-ai/Trinity-MiniHermes 4.3 36B: https://huggingface.co/NousResearch/Hermes-4.3-36BMistral Large 3 675B Instruct 2512: https://huggingface.co/mistralai/Mistral-Large-3-675B-Instruct-2512Olmo 3.1 32B Think: https://huggingface.co/allenai/Olmo-3.1-32B-ThinkGLM 4.6V 108B: https://huggingface.co/zai-org/GLM-4.6VNemotron 3 Nano 30B A3B: https://huggingface.co/nvidia/NVIDIA-Nemotron-3-Nano-30B-A3B-BF16Solar Open 102B: https://huggingface.co/upstage/Solar-Open-100BServicenow Apriel 1.5 15B Thinker: https://huggingface.co/ServiceNow-AI/Apriel-1.5-15b-ThinkerKwaipilot KAT Dev 72B Experimental: https://huggingface.co/Kwaipilot/KAT-DevMinimax M2 229B: https://huggingface.co/MiniMaxAI/MiniMax-M2Moonshot Kimi Linear 48B-A3B: https://huggingface.co/moonshotai/Kimi-Linear-48B-A3B-InstructWeibo AI VibeThinker 1.5B: https://huggingface.co/WeiboAI/VibeThinker-1.5BDeepseek R1 0528 685B: https://huggingface.co/deepseek-ai/DeepSeek-R1-0528Qwen3 Coder 480B A35B: https://github.com/QwenLM/Qwen3-CoderQwen3-235B-A22B 2507: https://github.com/QwenLM/Qwen3HunyuanWorld-1.0: https://github.com/Tencent-Hunyuan/HunyuanWorld-1.0GLM 4.5 355B-A32B: https://github.com/zai-org/GLM-4.5OpenAI GPT OSS 120B: https://github.com/openai/gpt-ossNvidia Llama Nemotron Super 49B and Ultra 253B: https://www.nvidia.com/en-us/ai-data-science/foundation-models/llama-nemotron/Dots.llm1 142B: https://github.com/rednote-hilab/dots.llm1QwQ-32B: https://qwenlm.github.io/blog/qwq-32b/Llama 3.1, 3.2, 3.3 and 4: https://www.llama.com/Llama 4 Maverick 400B: https://huggingface.co/meta-llama/Llama-4-Maverick-17B-128E-InstructLlama 4 Scout 109B: https://huggingface.co/meta-llama/Llama-4-Scout-17B-16E-InstructCogito v1 preview 70B: https://huggingface.co/deepcogito/cogito-v1-preview-qwen-32BGLM-4-32B: https://github.com/THUDM/GLM-4Mistral Large 2 123B: https://huggingface.co/mistralai/Mistral-Large-Instruct-2407Pixtral Large 123B: https://mistral.ai/news/pixtral-large/Llama-3.2 Vision 90B: https://huggingface.co/meta-llama/Llama-3.2-90B-VisionLlama-3.1 405B: https://huggingface.co/meta-llama/Llama-3.1-405BDeepseek V3.1 685B: https://huggingface.co/deepseek-ai/DeepSeek-V3.1-BaseMiniMax-01 456B: https://www.minimaxi.com/en/news/minimax-01-series-2Tülu 3 405B: https://allenai.org/tuluQwen2.5 VL 72B: https://huggingface.co/Qwen/Qwen2.5-VL-72B-InstructAya Vision: https://cohere.com/blog/aya-visionGemma-3 27B: https://blog.google/technology/developers/gemma-3/Mistral Small 3.1 24B: https://mistral.ai/news/mistral-small-3-1EXAONE Deep 32B: https://github.com/LG-AI-EXAONE/EXAONE-DeepSkywork-R1V 38B: https://github.com/SkyworkAI/Skywork-R1VPhi-4 models: https://azure.microsoft.com/en-us/blog/one-year-of-phi-small-language-models-making-big-leaps-in-ai/Seed1.5-VL: https://seed.bytedance.com/en/tech/seed1_5_vlBAGEL 14B: https://bagel-ai.org/UniVG-R1 8B: https://amap-ml.github.io/UniVG-R1-page/Magistral-Small-2506 24B: https://huggingface.co/mistralai/Magistral-Small-2506MiniMax-M1: https://github.com/MiniMax-AI/MiniMax-M1Tencent Hunyuan-A52B: https://github.com/Tencent-Hunyuan/Tencent-Hunyuan-LargeTencent Hunyuan-A13B: https://github.com/Tencent-Hunyuan/Hunyuan-A13BBaidu ERNIE 4.5: https://huggingface.co/collections/baidu/ernie-45-6861cd4c9be84540645f35c9Ai2 OLMo 2: https://allenai.org/olmoPangu-Pro-MOE-72B: https://huggingface.co/IntervitensInc/pangu-pro-moe-modelKimi K2 0905 1T: https://huggingface.co/moonshotai/Kimi-K2-Instruct-0905ByteDance Seed OSS 36B: https://huggingface.co/ByteDance-Seed/Seed-OSS-36B-InstructGrok 2 270B: https://huggingface.co/xai-org/grok-2Command A Reasoning 111B: https://huggingface.co/CohereLabs/command-a-reasoning-08-2025LongCat-Flash 560B: https://github.com/meituan-longcat/LongCat-Flash-ChatK2-Think 32B: https://huggingface.co/LLM360/K2-ThinkOlmo 3 32B Think: https://huggingface.co/allenai/Olmo-3-32B-ThinkUni-MoE-2.0-Omni: https://idealistxy.github.io/Uni-MoE-v2.github.io/LLaDA 2.0 flash: https://huggingface.co/inclusionAI/LLaDA2.0-flashNousCoder 14B: https://huggingface.co/NousResearch/NousCoder-14BHyperCLOVA X SEED 32B Think: https://huggingface.co/naver-hyperclovax/HyperCLOVAX-SEED-Think-32BGLM 4.7 Flash 30B-A3B: https://huggingface.co/zai-org/GLM-4.7-FlashNvidia Alpamayo R1 10B: https://huggingface.co/nvidia/Alpamayo-R1-10B

SoftwareNvidia Dynamo: https://www.nvidia.com/en-us/ai/dynamo/Nvidia Github: https://github.com/NVIDIANvidia CUDA: https://developer.nvidia.com/cuda-downloads?target_os=Linux&target_arch=arm64-sbsaNvidia Container-toolkit: https://github.com/NVIDIA/nvidia-container-toolkitNvidia Tensorflow: https://github.com/NVIDIA/tensorflowNvidia Pytorch: https://catalog.ngc.nvidia.com/orgs/nvidia/containers/pytorchKeras: https://keras.io/Apache OpenNLP: https://opennlp.apache.org/Nvidia NIM models: https://build.nvidia.com/explore/discoverNvidia Triton inference server: https://www.nvidia.com/en-us/ai-data-science/products/triton-inference-server/Nvidia NeMo Customizer: https://developer.nvidia.com/blog/fine-tune-and-align-llms-easily-with-nvidia-nemo-customizer/Huggingface text generation inference: https://github.com/huggingface/text-generation-inferencevLLM - inference and serving engine: https://github.com/vllm-project/vllmvLLM docker image: https://hub.docker.com/r/drikster80/vllm-gh200-openaiOllama - run LLMs locally: https://ollama.com/Open WebUI: https://openwebui.com/Llama.cpp: https://github.com/ggml-org/llama.cppComfyUI: https://www.comfy.org/LM Studio: https://lmstudio.ai/Llamafile: https://github.com/Mozilla-Ocho/llamafileFine-tune Llama 3 with PyTorch FSDP and Q-Lora: https://www.philschmid.de/fsdp-qlora-llama3/Perplexica: https://github.com/ItzCrazyKns/PerplexicaMorphic: https://github.com/miurla/morphicOpen-Sora: https://github.com/hpcaitech/Open-SoraFlux.1: https://github.com/black-forest-labs/fluxStorm: https://github.com/stanford-oval/stormStable Diffusion 3.5: https://huggingface.co/stabilityai/stable-diffusion-3.5-largeGenmo Mochi1: https://github.com/genmoai/modelsGenmo Mochi1 (reduced VRAM): https://github.com/victorchall/genmoai-smolRhymes AI Allegro: https://github.com/rhymes-ai/AllegroOmniGen: https://github.com/VectorSpaceLab/OmniGenSegment anything: https://github.com/facebookresearch/segment-anythingAutoVFX: https://haoyuhsu.github.io/autovfx-website/DimensionX: https://chenshuo20.github.io/DimensionX/Nvidia Add-it: https://research.nvidia.com/labs/par/addit/MagicQuill: https://magicquill.art/demo/AnythingLLM: https://github.com/Mintplex-Labs/anything-llmPyramid-Flow: https://pyramid-flow.github.io/LTX-Video: https://github.com/Lightricks/LTX-VideoCogVideoX: https://github.com/THUDM/CogVideoOmniControl: https://github.com/Yuanshi9815/OminiControlSamurai: https://yangchris11.github.io/samurai/All Hands: https://www.all-hands.dev/Tencent HunyuanVideo: https://aivideo.hunyuan.tencent.com/Aider: https://aider.chat/Unsloth: https://github.com/unslothai/unslothAxolotl: https://github.com/axolotl-ai-cloud/axolotlStar: https://nju-pcalab.github.io/projects/STAR/Sana: https://nvlabs.github.io/Sana/RepVideo: https://vchitect.github.io/RepVid-Webpage/UI-TARS: https://github.com/bytedance/UI-TARSDiffuEraser: https://lixiaowen-xw.github.io/DiffuEraser-page/Go-with-the-Flow: https://eyeline-research.github.io/Go-with-the-Flow/3DTrajMaster: https://fuxiao0719.github.io/projects/3dtrajmaster/YuE: https://map-yue.github.io/DynVFX: https://dynvfx.github.io/ReasonerAgent: https://reasoner-agent.maitrix.org/Open-source DeepResearch: https://huggingface.co/blog/open-deep-researchDeepscaler: https://github.com/agentica-project/deepscalerInspireMusic: https://funaudiollm.github.io/inspiremusic/FlashVideo: https://github.com/FoundationVision/FlashVideoMatAnyone: https://pq-yang.github.io/projects/MatAnyone/LocalAI: https://localai.io/Stepvideo: https://huggingface.co/stepfun-ai/stepvideo-t2vSkyReels: https://github.com/SkyworkAI/SkyReels-V2OctoTools: https://octotools.github.io/SynCD: https://www.cs.cmu.edu/~syncd-project/Mobius: https://mobius-diffusion.github.io/Wan2.2: https://wan.video/TheoremExplainAgent: https://tiger-ai-lab.github.io/TheoremExplainAgent/RIFLEx: https://riflex-video.github.io/Browser use: https://browser-use.com/HunyuanVideo-I2V: https://github.com/Tencent/HunyuanVideo-I2VSpark-TTS: https://sparkaudio.github.io/spark-tts/GEN3C: https://research.nvidia.com/labs/toronto-ai/GEN3C/DiffRhythm: https://aslp-lab.github.io/DiffRhythm.github.io/Babel: https://babel-llm.github.io/babel-llm/Diffusion Self-Distillation: https://primecai.github.io/dsd/OWL: https://github.com/camel-ai/owlANUS: https://github.com/nikmcfly/ANUSLong Context Tuning for Video Generation: https://guoyww.github.io/projects/long-context-video/Tight Inversion: https://tight-inversion.github.io/VACE: https://ali-vilab.github.io/VACE-Page/SANA-Sprint: https://nvlabs.github.io/Sana/Sprint/Sesame Conversational Speech Model: https://github.com/SesameAILabs/csmSearch-R1: https://github.com/PeterGriffinJin/Search-R1AI Scientist: https://github.com/SakanaAI/AI-ScientistSpatialLM: https://manycore-research.github.io/SpatialLM/Nvidia Cosmos: https://www.nvidia.com/en-us/ai/cosmos/AudioX: https://zeyuet.github.io/AudioX/AccVideo: https://aejion.github.io/accvideo/Video-T1: https://liuff19.github.io/Video-T1/InfiniteYou: https://bytedance.github.io/InfiniteYou/BizGen: https://bizgen-msra.github.io/ParetoQ: https://github.com/facebookresearch/ParetoQDAPO: https://dapo-sia.github.io/OpenDeepSearch: https://github.com/sentient-agi/OpenDeepSearchKTransformers: https://github.com/kvcache-ai/ktransformersSkyReels-A2: https://skyworkai.github.io/skyreels-a2.github.io/Human Skeleton and Mesh Recovery: https://isshikihugh.github.io/HSMR/Segment Any Motion in Videos: https://motion-seg.github.io/Lumina-mGPT 2.0: https://github.com/Alpha-VLLM/Lumina-mGPT-2.0HiDream-I1: https://github.com/HiDream-ai/HiDream-I1HiDream-E1: https://github.com/HiDream-ai/HiDream-E1Transformer Lab: https://transformerlab.ai/One-Minute Video Generationwith Test-Time Training: https://test-time-training.github.io/video-dit/OmniTalker: https://humanaigc.github.io/omnitalker/UNO: https://bytedance.github.io/UNO/Skywork-OR1: https://github.com/SkyworkAI/Skywork-OR1LettuceDetect: https://github.com/KRLabsOrg/LettuceDetectSonic: https://jixiaozhong.github.io/Sonic/InstantCharacter: https://instantcharacter.github.io/Dive: https://github.com/OpenAgentPlatform/DiveNari Dia-1.6B: https://github.com/nari-labs/diaFramePack: https://github.com/lllyasviel/FramePackRAGEN: https://github.com/RAGEN-AI/RAGENLiveCC: https://showlab.github.io/livecc/Reflection2perfection: https://diffusion-cot.github.io/reflection2perfection/UNI3C: https://ewrfcas.github.io/Uni3C/MAGI-1: https://github.com/SandAI-org/Magi-1Parakeet TDT 0.6B V2: https://huggingface.co/nvidia/parakeet-tdt-0.6b-v2VRAM Calculator: https://apxml.com/tools/vram-calculatorICEdit: https://river-zhang.github.io/ICEdit-gh-pages/FantasyTalking: https://fantasy-amap.github.io/fantasy-talking/3DV-TON: https://2y7c3.github.io/3DV-TON/WebThinker: https://github.com/RUC-NLPIR/WebThinkerSGLang: https://github.com/sgl-project/sglangJan: https://jan.ai/Rasa: https://rasa.com/ACE-Step: https://ace-step.github.io/AIQToolkit: https://github.com/NVIDIA/AIQToolkitZeroSearch: https://alibaba-nlp.github.io/ZeroSearch/DreamO: https://github.com/bytedance/DreamOHoloTime: https://zhouhyocean.github.io/holotime/FlexiAct: https://shiyi-zh0408.github.io/projectpages/FlexiAct/HunyuanCustom: https://hunyuancustom.github.io/PixelHacker: https://hustvl.github.io/PixelHacker/ZenCtrl: https://fotographer.ai/zenctrlT2I-R1: https://github.com/CaraJ7/T2I-R1TFrameX: https://github.com/TesslateAI/TFrameXTesslate Studio: https://github.com/TesslateAI/StudioContinuous Thought Machines: https://github.com/SakanaAI/continuous-thought-machinesNVIDIA Isaac GR00T: https://github.com/NVIDIA/Isaac-GR00TBLIP3-o: https://github.com/JiuhaiChen/BLIP3oDeerFlow: https://github.com/bytedance/deer-flowClara: https://github.com/badboysm890/ClaraVerseVoid: https://voideditor.com/LLM-d: https://github.com/llm-d/llm-dRoocode: https://roocode.com/MCP Filesystem Server: https://github.com/mark3labs/mcp-filesystem-serverSurfsense: https://github.com/MODSetter/SurfSenseS3: https://github.com/pat-jj/s3Ramalama: https://ramalama.ai/FLUX.1 Kontext: https://bfl.ai/models/flux-kontextExLlamaV2: https://github.com/turboderp-org/exllamav2MLC LLM: https://github.com/mlc-ai/mlc-llmLMDeploy: https://github.com/InternLM/lmdeployHunyuanVideo-Avatar: https://hunyuanvideo-avatar.github.io/OmniConsistency: https://github.com/showlab/OmniConsistencyPhantom: https://github.com/Phantom-video/PhantomChatterbox TTS: https://github.com/resemble-ai/chatterboxGemini Fullstack LangGraph: https://github.com/google-gemini/gemini-fullstack-langgraph-quickstartRagbits: https://github.com/deepsense-ai/ragbitsSparse transformers: https://github.com/NimbleEdge/sparse_transformersTokasaurus: https://github.com/ScalingIntelligence/tokasaurusDeepVerse: https://sotamak1r.github.io/deepverse/SkyReels-Audio: https://skyworkai.github.io/skyreels-audio.github.io/HunyuanCustom: https://hunyuancustom.github.io/OpenAudio: https://github.com/fishaudioKVzip: https://github.com/snu-mllab/KVzipHcompany: https://www.hcompany.ai/Text-to-LoRA: https://github.com/SakanaAI/text-to-loraRvn-tools: https://github.com/rvnllm/rvn-toolsTransformer Lab: https://github.com/transformerlab/transformerlab-appLlama.cpp Server Launcher: https://github.com/thad0ctor/llama-server-launcherPlayerOne: https://playerone-hku.github.io/SeedVR2: https://iceclear.github.io/projects/seedvr2/Any-to-Bokeh: https://vivocameraresearch.github.io/any2bokeh/LayerFlow: https://sihuiji.github.io/LayerFlow-Page/ImmerseGen: https://immersegen.github.io/InterActHuman: https://zhenzhiwang.github.io/interacthuman/LoRA-Edit: https://cjeen.github.io/LoraEditPaper/LMCache: https://github.com/LMCache/LMCachePolaris: https://github.com/ChenxinAn-fdu/POLARISLLM Visualization: https://bbycroft.net/llmOmniGen2: https://github.com/VectorSpaceLab/OmniGen2FLUX.1 Kontext [dev]: https://huggingface.co/black-forest-labs/FLUX.1-Kontext-devVMem: https://v-mem.github.io/SongBloom: https://github.com/Cypress-Yang/SongBloomHunyuan-GameCraft: https://hunyuan-gamecraft.github.io/Unmute: https://github.com/kyutai-labs/unmuteEX-4D: https://tau-yihouxiang.github.io/projects/EX-4D/EX-4D.htmlXVerse: https://github.com/bytedance/XVerseMemOS: https://memos.openmem.net/Opencode: https://github.com/sst/opencodeStreamDiT: https://cumulo-autumn.github.io/StreamDiT/ThinkSound: https://thinksound-project.github.io/OmniVCus: https://caiyuanhao1998.github.io/project/OmniVCus/Universal Tool Calling Protocol (UTCP): https://github.com/universal-tool-calling-protocolAgent Reinforcement Trainer: https://github.com/OpenPipe/ARTMeiGen MultiTalk: https://meigen-ai.github.io/multi-talk/ik_llama.cpp: https://github.com/ikawrakow/ik_llama.cppNeuralOS: https://neural-os.com/System prompts: https://github.com/x1xhlol/system-prompts-and-models-of-ai-toolsGPUstack: https://github.com/gpustack/gpustackRust GPU: https://rust-gpu.github.io/Hierarchical Reasoning Model: https://github.com/sapientinc/HRMHaystack: https://github.com/deepset-ai/haystackSeC: https://rookiexiong7.github.io/projects/SeC/ObjectClear: https://github.com/zjx0101/ObjectClearAlphaGo Moment: https://github.com/GAIR-NLP/ASI-ArchX-Omni: https://x-omni-team.github.io/Qwen-Image: https://huggingface.co/Qwen/Qwen-ImageStand-In: https://www.stand-in.tech/Yan: https://greatx3.github.io/Yan/OpenCUA: https://opencua.xlang.ai/InfiniteTalk: https://github.com/MeiGen-AI/InfiniteTalkRynnEC: https://github.com/alibaba-damo-academy/RynnECTINKER: https://aim-uofa.github.io/Tinker/CoMPaSS: https://compass.blurgy.xyz/OmniHuman-1.5: https://omnihuman-lab.github.io/v1_5/HunyuanVideo-Foley: https://szczesnys.github.io/hunyuanvideo-foley/Wan-S2V: https://humanaigc.github.io/wan-s2v-webpage/HunyuanWorld-Voyager: https://github.com/Tencent-Hunyuan/HunyuanWorld-VoyagerHunyuanImage-2.1: https://huggingface.co/tencent/HunyuanImage-2.1NVIDIA NeMo Agent toolkit: https://github.com/NVIDIA/NeMo-Agent-ToolkitWan-Animate: https://humanaigc.github.io/wan-animate/Lucy Edit: https://github.com/DecartAI/Lucy-Edit-ComfyUI/Tencent SRPO: https://github.com/Tencent-Hunyuan/SRPOUMO: https://bytedance.github.io/UMO/Tongyi DeepResearch: https://tongyi-agent.github.io/blog/introducing-tongyi-deep-research/Lynx: https://byteaigc.github.io/Lynx/HunyuanImage-3.0: https://huggingface.co/tencent/HunyuanImage-3.0DIffbot LLM Inference Server: https://github.com/diffbot/diffbot-llm-inferenceDitto: https://github.com/EzioBy/DittoSurfSense: https://github.com/MODSetter/SurfSenseKilocode: https://github.com/Kilo-Org/kilocodeTOON Converter: https://www.toonllm.dev/OrKa: https://github.com/marcosomma/orka-reasoningQwen Image Edit 2509: https://huggingface.co/Qwen/Qwen-Image-Edit-2509FLUX.1 Krea dev: https://huggingface.co/black-forest-labs/FLUX.1-Krea-devSkywork UniPic: https://github.com/SkyworkAI/UniPicMatrix Game 2.0: https://matrix-game-v2.github.io/Matrix 3D: https://matrix-3d.github.io/Voost: https://nxnai.github.io/Voost/WinT3R: https://lizizun.github.io/WinT3R.github.io/LuxDiT: https://research.nvidia.com/labs/toronto-ai/LuxDiT/IndexTTS2: https://github.com/index-tts/index-ttsFireRedTTS-2: https://github.com/FireRedTeam/FireRedTTS2VoxCPM: https://github.com/OpenBMB/VoxCPM/OmniInsert: https://phantom-video.github.io/OmniInsert/Wan Alpha: https://github.com/WeChatCV/Wan-AlphaOvi: https://github.com/character-ai/OviOmniRetarget: https://omniretarget.github.io/Lumina-DiMOO: https://synbol.github.io/Lumina-DiMOO/Paper2Video: https://showlab.github.io/Paper2Video/ChronoEdit: https://research.nvidia.com/labs/toronto-ai/chronoedit/StreamingVLM: https://github.com/mit-han-lab/streaming-vlmDreamOmni2: https://pbihao.github.io/projects/DreamOmni2/index.htmlHunyuanWorld Mirror: https://huggingface.co/tencent/HunyuanWorld-MirrorHoloCine: https://holo-cine.github.io/Krea Realtime 14B: https://huggingface.co/krea/krea-realtime-videoUltraGen: https://sjtuplayer.github.io/projects/UltraGen/Stable Video Infinity: https://github.com/vita-epfl/Stable-Video-InfinityDeepSeek OCR: https://github.com/deepseek-ai/DeepSeek-OCRLongCat Video: https://meituan-longcat.github.io/LongCat-Video/MoCha: https://orange-3dv-team.github.io/MoCha/GRAG: https://little-misfit.github.io/GRAG-Image-Editing/BindWeave: https://lzy-dot.github.io/BindWeave/UniLumos: https://github.com/alibaba-damo-academy/Lumos-CustomBrain-IT: https://amitzalcher.github.io/Brain-IT/OlmoEarth: https://allenai.org/blog/olmoearth-modelsMotionStream: https://joonghyuk.com/motionstream-web/InfinityStar: https://github.com/FoundationVision/InfinityStarMaya-Research Maya 1: https://huggingface.co/maya-research/maya1Step Audio EditX: https://github.com/stepfun-ai/Step-Audio-EditXEVTAR: https://github.com/360CVGroup/RefVTONMiroThinker: https://huggingface.co/miromind-ai/MiroThinker-v1.5-235BHunyuanVideo-1.5: https://huggingface.co/tencent/HunyuanVideo-1.5Segment Anything Model 3: https://ai.meta.com/sam3/Depth Anything 3: https://depth-anything-3.github.io/Kandinsky: https://kandinskylab.ai/Proactive Hearing Assistant: https://proactivehearing.cs.washington.edu/DR Tulu: https://github.com/rlresearch/dr-tuluFLUX 2 dev: https://huggingface.co/black-forest-labs/FLUX.2-devZ Image: https://huggingface.co/Tongyi-MAI/Z-ImageOpenCode: https://opencode.ai/Crush: https://github.com/charmbracelet/crushGeoVista: https://ekonwang.github.io/geo-vista/RynnVLA-002: https://github.com/alibaba-damo-academy/RynnVLA-002iMontage: https://kr1sjfu.github.io/iMontage-web/Heretic: https://github.com/p-e-w/hereticPydantic: https://github.com/pydantic/pydantic-aiNitroGen: https://huggingface.co/nvidia/NitroGenHY-World 1.5: https://huggingface.co/tencent/HY-WorldPlayQwen Image Edit 2511: https://huggingface.co/Qwen/Qwen-Image-Edit-2511FlashPortrait: https://francis-rings.github.io/FlashPortrait/Generative Refocusing: https://generative-refocusing.github.io/StoryMem: https://kevin-thu.github.io/StoryMem/ReCo: https://zhw-zhang.github.io/ReCo-page/Spatia: https://zhaojingjing713.github.io/Spatia/DreaMontage: https://dreamontage.github.io/DreaMontage/Flyte: https://github.com/flyteorg/flyteMetaflow: https://github.com/Netflix/metaflowKedro: https://github.com/kedro-org/kedroLangfuse: https://github.com/langfuse/langfuseEvidently: https://github.com/evidentlyai/evidentlyQwen-Image-2512: https://huggingface.co/Qwen/Qwen-Image-2512Stream-DiffVSR: https://jamichss.github.io/stream-diffvsr-project-page/Yume 1.5: https://stdstu12.github.io/YUME-Project/SpotEdit: https://biangbiang0321.github.io/SpotEdit.github.io/HY-Motion 1.0: https://github.com/Tencent-Hunyuan/HY-Motion-1.0ProEdit: https://isee-laboratory.github.io/ProEdit/JavisGPT: https://javisverse.github.io/JavisGPT-page/SpaceTimePilot: https://zheninghuang.github.io/Space-Time-Pilot/Lightricks LTX-2: https://huggingface.co/Lightricks/LTX-2GLM Image: https://huggingface.co/zai-org/GLM-ImageBurn: https://github.com/tracel-ai/burnHeartMuLa: https://github.com/HeartMuLa/heartlibKreuzberg: https://github.com/kreuzberg-dev/kreuzbergOpenClaw: https://openclaw.ai/SIM: https://www.sim.ai/NocoBase: https://www.nocobase.com/Dify: https://dify.ai/

BenchmarkingGB10 vs. GH200: https://www.phoronix.com/review/nvidia-gb10-gh200Performance of llama.cpp on NVIDIA Grace Hopper GH200: https://github.com/ggml-org/llama.cpp/discussions/18005GPU benchmark: https://github.com/mag-/gpu_benchmarkOllama benchmark: https://llm.aidatatools.com/results-linux.phpPhoronix test suite: https://www.phoronix-test-suite.com/MLCommons: https://mlcommons.org/benchmarks/Artifical Analysis: https://artificialanalysis.ai/Lmarena: https://lmarena.ai/Livebench: https://livebench.ai/AMD vs NVIDIA Inference Benchmark: https://semianalysis.com/2025/05/23/amd-vs-nvidia-inference-benchmark-who-wins-performance-cost-per-million-tokens/Openrouter Rankings: https://openrouter.ai/rankingsEpoch.ai Benchmarks: https://epoch.ai/benchmarksNeuSight: https://github.com/sitar-lab/NeuSightInferenceMAX: https://inferencemax.semianalysis.com/NVIDIA HPC-Benchmarks: https://catalog.ngc.nvidia.com/orgs/nvidia/containers/hpc-benchmarks?version=25.09Contact

Email: x@GPTshop.ai

GPT LLC

122 Mary Street

George Town

Grand Cayman KY1-1206

Cayman Islands

Company register number: HM-7509

Free shipping worldwide. 3-year manufacturer's warranty. Our prices do not contain any taxes. We accept almost all currencies there are. Payment is possible via wire transfer or cash.

Try

Try before you buy. You can apply for remote testing. You will then be given login credentials for remote access. If you want to come by and see for yourself and run some tests, that is also possible any time.

Currently available for testing:

GH200 624GB

Apply via email: x@GPTshop.ai

Example use case 1: Inferencing MiniMax M2.1 229B, Xiaomi MiMo V2 Flash 310B, ZAI GLM-4.7 358B, DeepSeek V3.2 Speciale 685B, Moonshot AI Kimi K2.5 1T Thinking

Example use case 1: Inferencing MiniMax M2.1 229B, Xiaomi MiMo V2 Flash 310B, ZAI GLM-4.7 358B, DeepSeek V3.2 Speciale 685B, Moonshot AI Kimi K2.5 1T Thinking What is the difference to alternative systems?

What is the difference to alternative systems? Compute performance of one GB300

Compute performance of one GB300